No source control of databases

All too often I find that development teams will meticulously source control and code-review changes to their application source code, but this process is never applied to databases. In just about every TEST, UAT and even PROD database that I work with, changes to the database schema over time will leave broken stored procedures, broken views and even orphaned child records. By broken, I mean that the stored procedure or view is relying on fields that have been re-named or removed from the underlying tables. These procedures will never run successfully until they have been identified and fixed.

Problems with a common DEV database

Changing database schemas present even an even greater problem when development teams all use a common DEV database. If one developer applies a patch to a common DEV database as part of his check-in process, it can easily break everyone else’s environment and unit tests until each developer in turn updates their code to match the latest check-in. Even worse, if this check-in has introduced a bug, and causes further unit tests to fail on a build server, then the entire team is forced to scramble and try to fix the broken build, or even roll back the offending changeset, and restore the database to allow other developers to continue working. Using a common DEV database just does not make sense.

This common database approach can also lead to serious headaches when a source tree has been branched for either a feature or as part of a production deployment. If schema changes occur on the trunk branch, this can seriously impact anyone still using the older schema on a production branch. Again, using a common DEV database just does not make sense.

Use an isolated database instance per developer

The sensible way of dealing with schema changes is two-fold.

Firstly, each developer must have their own instance of the database as a “play pen” – so that they can make schema changes or massage data without interfering with another developers working environment.

Secondly, each developer must have a quick and easy way to restore a database to a point in time – before their changes were applied. In this way, scripts that developers are writing can be re-run against a “clean” version of the database, effectively testing these scripts before checking in.

Moreover, when a source code tree is branched, the database schema and data at that time should be branched along with the source code, so that any developer working on the branch will be able to recreate a “clean” database with the schema that relates to that branch.

Include your database in Source Control

The solution to these database dilemmas is surprisingly simple. Script out your entire database and store these scripts on disk, and check them in to source control. These scripts are then used to re-create each developer’s working database. As the scripts are in source control, you now have a fully source controlled and versioned database. This gives developers the same freedoms as normal application code, with the ability to branch, merge, check-in, checkout with freedom.

The only problem is that this scripting process should be as easy as compiling source code in an IDE. That is, checkout from source, open up your IDE, compile your code and check for errors. This is where DBSourceTools comes in.

DBSourceTools

DBSourceTools is designed to help developers source-control their databases. It will script an entire database to disk. Once these scripts are on disk, they can be used to re-create the database in it’s entirety from these files on disk. Adding these scripts to source control allows developers to re-create a database as it was at a point in time in source control.

This mechanism is very similar to Microsoft Database Projects – Microsoft themselves generate scripts within a database project, and deploy these scripts ( essentially compiling the database ) under the covers.

The following diagram shows the basic usage of DBSourceTools:

1. Connect to a source database. If using an established database, then this is usually the TEST or UAT instance.

2. DBSourceTools will script all objects within this database, and (optionally) it’s data to local disk.

3. This directory structure is then committed into Source Control.

4. DBSourceTools then loads these scripts from disk, and includes any patches in the patches directory to be run after the database is created.

5. DBSourceTools then deploys the database to a new Target Database ( usually on the local SQL instance), loads all data, and applies any patches.

a. Note that this is a two step process, DBSourceTools will DELETE the target database, and then completely RECREATE it from scratch.

6. These patches can then be added to source control.

Scenario 2 :

Once added to Source Control, a second developer can re-create this database without a connection to the original source database – as all required objects and data are part of the files on disk. The following diagram shows this process:

1. Update source tree on local disk from Source Control

2. This update will fetch all required scripts, data and patches from Source Control.

3. Run DBSourceTools to load the project.

4. Deploy the target database ( usually to the local SQL instance ).

Benefits of using DBSourceTools.

All developers use their own local instance of the database.

This means that two developers can make their own schema changes to an isolated instance of the database independently of each other, and not step on each other’s toes. Data Access Layer objects can be modified, and will only take effect once both the code and the database patches are committed to Source Control.

Databases are an instant in time.

Because all database objects are scripted to disk, and DBSourceTools DELETES and then RECREATES it’s Target database, a developer can quickly and effectively RESET the database to an instant in time – before any changes were made.

This instant in time is protected by Source Control, so going back to a specific changeset means that the database can be re-created at the instant in time that the changeset was created.

Patching

Using the very simple patching mechanism, developers can easily test whether their patches will be successful, in an iterative manner:

· Delete and re-create the database from source – to an instant in time before any changes were made.

· Write sql scripts, and test it against the database.

· Bundle these sql scripts into a patch, and include it in the Patches directory.

· Delete and re-create the database in one step, including the new patch.

· Ensure that the patch worked correctly.

Merging changes from other developers.

When patches are added to source control from other developers, it is a simple matter of updating the patches directory with their changes, and re-deploying the database. DBSourceTools will run all of the patches in one go – thereby checking to see whether your patch works correctly with new patches committed by other developers.

If your patch does not work correctly because of other patches, you can easily modify it, re-run it again and again before checking into source control.

TEST, UAT and PROD patching.

If you are unsure whether a patch will work correctly on TEST, UAT or PROD data, then it is a simple matter of getting a backup of any of these databases, scripting them to disk, and running through the patches as normal.

Database compilation.

By re-building a database from source files, errors in a database schema can be quickly found. As databases evolve over time, quite often stored procedures or views become un-usable because they are targeting fields or tables that have been removed or deleted. By re-building a database from scripts, these problems can be quickly identified and resolved.

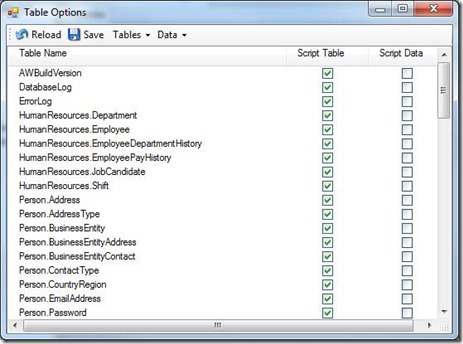

Data included

DBSourceTools has powerful options to select which data should be scripted to disk. Select all tables, or just “configuration” tables when scripting a database to disk. DBSourceTools uses the SQLBulkCopy routines, and can load millions of records in a matter of seconds.

By including data with your database, applications can be load tested with data volumes, or debugged against PROD or UAT data – all within the safety of a local SQL instance.

Step-by-step Tutorial

Let’s go through the process of using DBSourceTools in a step by step manner. We will start with a blank database, and then use the patching mechanism to create some tables and insert some data.

Create a blank database

To start off with, create a blank database on your local SQL instance – using SQL Management Studio, and call it TutorialDb_0.0.

Loading a Source Database

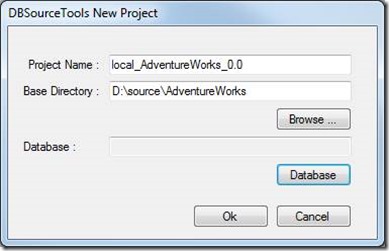

Now fire up DBSourceTools, and select New Project. This project will need a name, which we have specified as Tutorial_Project_0.0, and it will need a base directory on disk – which we have chosen to be d:\source\TutorialDb:

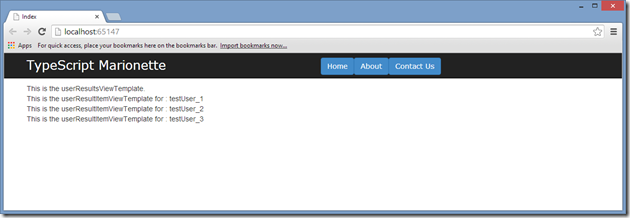

Now click on the Database button. This will give you the following database screen:

A database Nick Name can be anything, but nick names must be UNIQUE across a project. I prefer to use the source server name as the prefix, then the database name, and then a version number. If you were scripting this database from a TEST environment, then I would name this database TEST_TutorialDB_0.0, or if from PROD, then PROD_TutorialDb_0.0.

You can connect to any server, use Windows Auth or SQL Auth. Once you have selected an Authentication scheme, click on the Databases button to bring up a list of databases on that server, and select which one you require.

Then click on the Test Connection button. The Test Connection button simply checks to see whether you have the correct permissions on the source database to allow for scripting. If not, you will need to modify the permissions on the source database to allow for db_owner privileges.

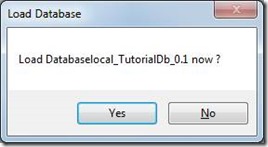

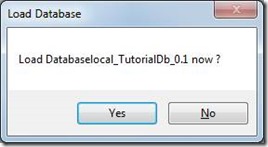

Once the connection has succeeded, click on OK, then Ok again. Your source database connection is now setup. DBSourceTools will then prompt if you would like to load the database now. Click Yes.

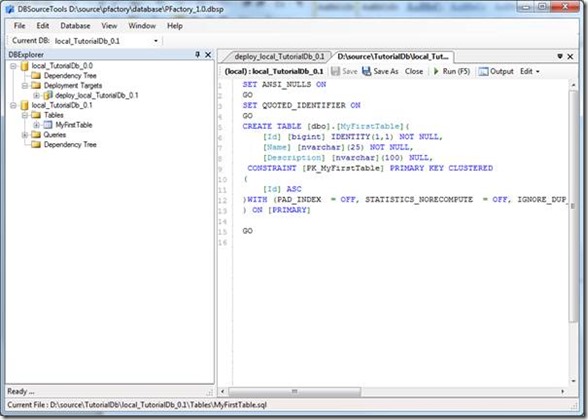

Once the load process is finished, you will see your source database on the DBExplorer panel on the left hand side of the screen.

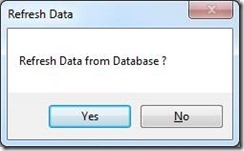

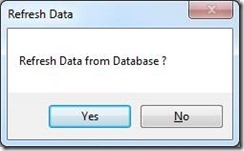

It is a good idea to save this project at this stage, so Click on File | Save Project, and then click on No when DBSourceTools asks you if you would like to Refresh the data.

Creating a Target Database

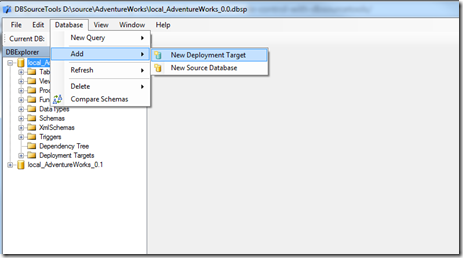

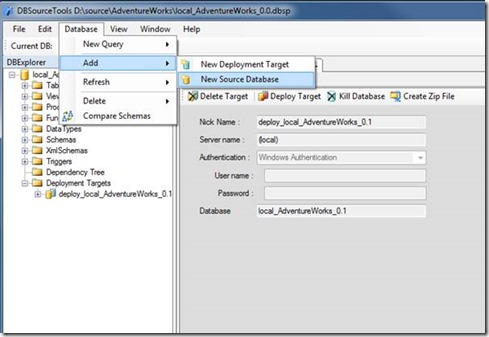

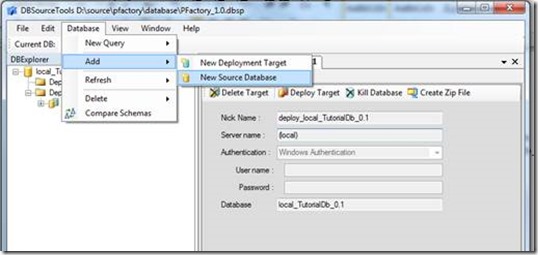

Our database does not have anything in it as yet, but let’s create a target database so that we can start using the patching engine. Click on Database | Add | New Deployment Target

This brings up a similar database connection dialog as follows:

The only required fields on this screen are Nick Name, and Database. Note the naming convention for the Nick Name. I always prefix a deployment Target database with the word deploy, followed by the servername, followed by the database name, and an incremented version. Again, these nick-names must be UNIQUE across a DBSourceTools project.

It is not necessary to click on the Databases or Test Connection buttons when creating a Target Database.

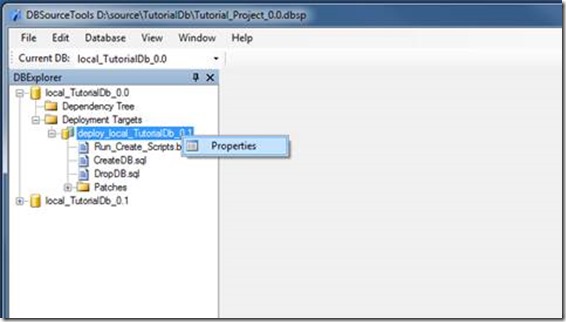

Clicking OK here will create a deployment target database that is under the tree structure of your original source database. Expand the tree until you can see this new database.

Again, remember to Save the project now.

Deploying the Target Database

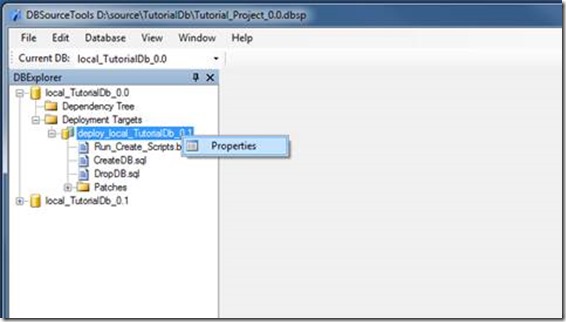

To deploy our Source database to our Target database, right-click on the Target database, and select Properties:

This will bring up the Target database properties in the panel on the right hand side:

Click on the Deploy Target button. This button will open a new window, and execute the Run_Create_Scripts.bat file which is on disk, and is a child of the deploy_local_TutorialDb_0.1 directory:

This new database (local_TutorialDb_0.1) should now be created on your local SQL server.

Creating Patches

Now that we have deployed our source database to the target database, we can start creating patches. These patches will be attached to our Source database – under the patches directory of the deployment target. When writing and creating patches, I always find it handy to have both Source and Target database available within the same project.

Add your target database as a source.

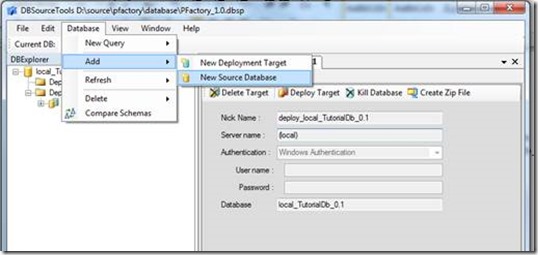

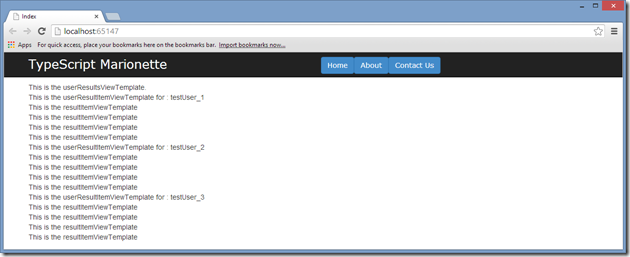

Click on the Database | Add | New Source database to create a new Source database within the same project:

This source database will actually be the Target ( local_TutorialDb_0.1) database that we deployed earlier. Use the New Database Connection dialogue to specify this as the source database:

Note that I have used the same naming convention for source database Nick Name as earlier : machine name, database name, version number. We can also click on the Databases button to select the local_TutorialDb_0.1 database from the available list, and then we need to click on Test Connection before the OK button will become available.

Once you have hit OK, Click on Yes to load the database into DBSourceTools.

This will load the new database as a source database, and include it in the DBExplorer:

Remember that our source database is the one at the top, and has a version number of 0.0. The database that we are deploying to is on the bottom, and has a version number of 0.1.

Creating and Scripting Tables.

This new source database (local_TutorialDb_0.1) is now a “Playground” instance that we can use to create tables, insert data, or generally design our new database in. Once we have made changes to this database, we will need to ensure that we create patches from these new tables, views, etc, and include them in the Patches Directory of our deployment target.

Deploying from a source database ( 0.0 ) to a target database ( 0.1 ) will completely delete the target database before re-creating it and running patches. So remember that the “Playground” database can be wiped clean at any stage, and you can start from scratch if you make any unrecoverable mistakes.

You can create a new table using SQL Management Studio , or simply by running sql scripts, or in whatever way you like.

Once created, though, make sure that you use DBSourceTools to script your database tables. When scripting tables from SQL Management Studio, the generated scripts DO NOT include any indexes that you may have created on the table, or in fact any related objects. You will need to generate scripts for your indexes in a separate step, and then combine these scripts to re-create your table successfully. This is obviously error-prone and time-consuming.

DBSourceTools will script tables, indexes and any related objects in one step.

As an example of this, let’s create a new table using DBSourceTools.

Create a Table

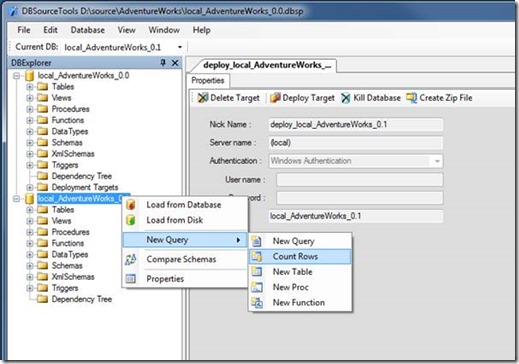

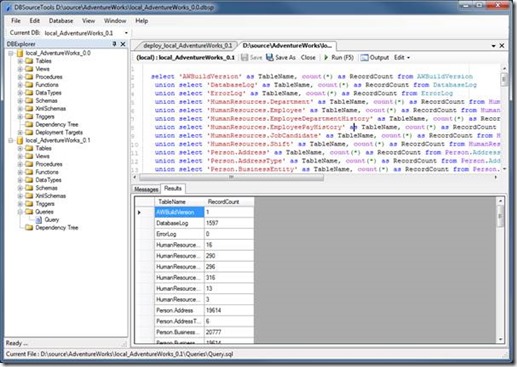

Right-click on the 0.1 version of your database, then select New Query, New Table to generate a sample script for creating a new table:

The resulting script has the basics of the sql that you will require in order to create a new table. Note that most of the script is just a list of commented SQL datatypes, inserted as handy reference should you want to refresh your memory on how to use different datatypes.

At the bottom of the script is line to add a constraint for a primary key – don’t forget to fill in the blanks here – all tables should have a primary key !

Modify the script to look something like this:

CREATE TABLE [dbo].[MyFirstTable](

[Id] [bigint] identity(1,1) NOT NULL,

[Name] [nvarchar](25) NOT NULL,

[Description] [nvarchar](100) NULL,

CONSTRAINT [PK_MyFirstTable] PRIMARY KEY ( [ID] ASC )

) ON [PRIMARY]

GO

Executing queries

To execute the current SQL query against the current database, simply it F5.

Saved Queries

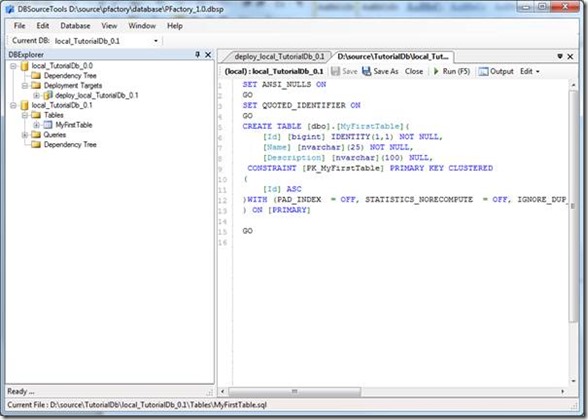

Notice the DBExplorer on the right-hand side. DBSourceTools has created a new Queries directory under local_TutorialDb_0.1, and saved your create table script as Query.sql. This Queries directory will be used for any scripts that DBSourceTools creates – so is a quick and handy way of going back to older scripts.

Any Query under the queries directory will be run against it’s parent database by default, so is a handy “ScratchPad” area to use when working with and running scripts.

Reloading from Database

All well and good so far, but our new table has not appeared in the DBExplorer tree view as yet. This is because DBSourceTools by default loads databases from Disk, not the database. What we will need to do now is to refresh what is on disk with what is actually in the database. To do this, right-click on the source database, and select Load from Database:

This will refresh the database structure from the updated database.

Once this is complete, you will see the MyFirstTable appear under the Tables node of the database.

Clicking on the expand tree icons, you will notice that DBSourceTools adds some handy features when working with database objects.

Firstly, double-clicking on the table name will bring up a source code view of the table definition. Secondly, the table has a Data icon. Double clicking on this data icon will open up a new window, and immediately show all data in the table. In SQL Management Studio, this is a two-step process – you need to right-click on the table and then click select top(1000) to have a quick view of your data.

Thirdly, there is a Fields icon, and expanding this will show a list of field names and their data types.

To view the SQL script definition of the table, simply double click on the table name:

Inserting Data

You can insert data into a table in whatever manner you choose, but DBSourceTools can also help generate a sample script. Right-click on the table, and select Script Insert. The generated script will provide enough information to be able to simply fill in the blanks:

We can modify this script pretty easily to insert two records into MyFirstTable:

insert into MyFirstTable(

/*[Id] bigint primary key (identity) */

[Name] /* NOT NULL */

,[Description]

)

values (

/*[Id] bigint primary key (identity) */

‘First name’ /*Name NOT NULL nvarchar */

,’First description’ /*Description nvarchar */

)

insert into MyFirstTable(

/*[Id] bigint primary key (identity) */

[Name] /* NOT NULL */

,[Description]

)

values (

/*[Id] bigint primary key (identity) */

‘Second name’ /*Name NOT NULL nvarchar */

,’Second description’ /*Description nvarchar */

)

Now hit F5 to run the script.

Viewing and Scripting Data

Double click on the data icon in the DBExplorer under the MyFirstTable icon:

This will bring up the data view, showing all records currently in the table. From here we can easily create an insert script to include this data in a patch. Simply click on the Script Data button in the Data Window. The generated script will automatically set identity insert on and then off to preserve our identity seed on the Id column, and also set a nocount on for running the script:

SET NOCOUNT ON

SET IDENTITY_INSERT [MyFirstTable] ON

insert into [MyFirstTable] ( [Id],[Name],[Description] ) values ( 1,’First name’,’First description’ )

insert into [MyFirstTable] ( [Id],[Name],[Description] ) values ( 2,’Second name’,’Second description’ )

SET IDENTITY_INSERT [MyFirstTable] OFF

SET NOCOUNT OFF

This script and the table definition are now ready for patching.

Creating patches

To include our new database table definition, and it’s data in a deployment step, we will now create two patches under the patches directory of our original source database. Use the DBExplorer window to expand the tree as follows: local_TutorialDb_0.0 > deployment targets > deploy_local_TutorialDB_0.0 > Patches.

Right-click on the patches icon, and select new patch:

Fill in the patch name. Note that patches are loaded alphabetically, so make sure that you number your patches. We will create a Patch_001_table_MyFirstTable as follows:

Create a second patch using the same process, and call this patch Patch_002_data_MyFirstTable.

Double clicking on a patch will open up the script in an editor window. So edit the Patch_001_table_MyFirstTable, and copy the definition of the MyFirstTable into it. Remember that double-clicking on any table name will bring up the database script used for the table – so find the table MyFirstTable, double-click on it, and copy the create script. Paste it into Patch_001, and save the file.

Edit the Patch_002_data_MyFirstTable by simply double-clicking on it. Copy and paste the insert script created into the previous step into this patch, and save the file.

Including the patches in the deployment script

DBSourceTools uses a simple batch file to run the deployment scripts, load tables and data, and run the patches. The file that it uses is called Run_Create_Scripts.bat, and lives as the first file underneath the deployment target database. Double-click on this file to see what it contains:

set DB_BASE_DIR=D:\source\TutorialDb\local_TutorialDb_0.0\

set BASE_BIN_DIR=C:\Program Files (x86)\DBSourceTools\

set PROJECT_BASE_DIR=D:\source\TutorialDb\

set PATCH_DIR=D:\source\TutorialDb\local_TutorialDb_0.0\DeploymentTargets\deploy_local_TutorialDb_0.1\

rem

sqlcmd -S (local) -E -i %DB_BASE_DIR%DeploymentTargets\deploy_local_TutorialDb_0.1\local_TutorialDb_0.1_DropDB.sql

sqlcmd -S (local) -E -i %DB_BASE_DIR%DeploymentTargets\deploy_local_TutorialDb_0.1\local_TutorialDb_0.1_CreateDB.sql

As we can see, this file is just setting some global variables, and then running a DropDB and CreateDB script. We now need to update this script to include our new patches.

This process is called Writing Targets. Using the DBExplorer, right-click on the Deployment Targets node of the source database, and click Write Targets.

This process also includes the option of refreshing data from the source database. At this time our source database is blank, so we can safely say No here.

Once this process has finished, open up the Run_Create_Scripts.bat file again. If you already have this file open, you may be viewing the in-memory version of this file, so it is always safer to close the file first, and then re-open it by double-clicking on the file.

Note how DBSourceTools has added our two patches at the bottom of the script:

sqlcmd -S (local) -E -i %DB_BASE_DIR%DeploymentTargets\deploy_local_TutorialDb_0.1\local_TutorialDb_0.1_DropDB.sql

sqlcmd -S (local) -E -i %DB_BASE_DIR%DeploymentTargets\deploy_local_TutorialDb_0.1\local_TutorialDb_0.1_CreateDB.sql

sqlcmd -f 850 -S (local) -d local_TutorialDb_0.1 -E -i "%PATCH_DIR%Patches\Patch_001_table_MyFirstTable.sql"

sqlcmd -f 850 -S (local) -d local_TutorialDb_0.1 -E -i "%PATCH_DIR%Patches\Patch_002_data_MyFirstTable.sql"

This Run_Create_Scripts.bat file is at the heart of the deployment process. Any time that we deploy a target database, this script will be run. Make sure that whenever you add patches or change options on the source database, you remember to do the Write Targets step to update this file.

Killing databases.

To re-deploy our database including our new patches, simply right-click on the target database and select properties. This will bring up the database properties screen, where you can hit the Deploy button to start the deployment process.

If your script hangs or gives errors during the database drop step, it may be that there are still connections open to the target database, which will interfere with the drop command. To close all existing connections and drop the database in one step, simply click on the Kill database button.

Let’s use this kill step, and then re-deploy the database:

Right-click on the deployment database named deploy_local_TutorialDb_0.1, and select Properties.

This will bring up the Properties window, with the Deploy Target and Kill Database buttons on the menu bar:

Go ahead and click on the Kill Database button to close all existing connections to the local_TutorialDB_0.1 database, and drop it in one step.

We can now hit the Deploy Target button to run the Run_Create_Scripts.bat file and re-create the database:

Check to see that there is no red text in the output window – if there is, then something has gone wrong with the deployment.

Checking output results

DBSourceTools keeps a copy of each deployment in a text file in the same directory as Run_Create_Scripts.bat If we fire up an explorer and navigate to this directory, we will find a DeployResults_ file for each deployment. The contents of this file are an exact copy of the output window above. If you encounter errors, then have a look at these files as a record of each of your previous deployment runs.

Verify the Target Database.

Once we have completed the deployment step, we can re-load our new database from the database, just to ensure that we still have all of our tables and data loaded correctly. To do this, right-click on the local_TutorialDB_0.1 database in the DBExplorer view, and select load from database.

Expand the nodes of this database to ensure that it contains the MyFirstTable, and then double click on the Data icon to check that this table has data.

Add files to Source Control

The last step in this process is to add files to your Source Control engine.

Sharing your database

DBSourceTools uses full path names for project files and script names. Unfortunately, this means that all developers must have the same path names on their machines in order to share databases. This can be easily accomplished by substituting the same drive letter on each developer machine. Drive substitution is different to mapping a network path, and is accomplished using the subst command in a DOS prompt.

Lets assume that developer 1 stores his source code in the following location:

C:\users\dev1\source\

And developer 2 stores his source code at:

C:\source

If the DBSourceTools base directory has been set at d:\source\TutorialDB, then we will need both developers to have the same d:\source\TutorialDB directory structure.

This can be easily accomplished by using the substitute command to substitute a virtual d: drive to c:\users\dev1. Run the following command in a DOS prompt

subst d: c:\users\source

If substitutions are necessary for your developer machine, then you can easily create a quick batch file to do this substitution, and run it on startup.

The same substitution on developer 2’s machine would simply be

Subst d: c:\

This ensures that the directory used by DBSourceTools is the same across both machines: d:\source\TutorialDb.

Summary

In this tutorial, we have used DBSourceTools to start with a blank database, deploy it to a target database, modify the target database, and then reverse-engineer our changes back into patch scripts.

At the end of this process, we can simply hand over the patches to a DBA who will be able to re-create our shiny new database on TEST, UAT and PROD boxes. DBA’s generally create databases themselves, as the disk space requirements and subtle tweaks needed in each environment are slightly different, and they would know best. So DBSourceTools can be used to help write these scripts.